Question:

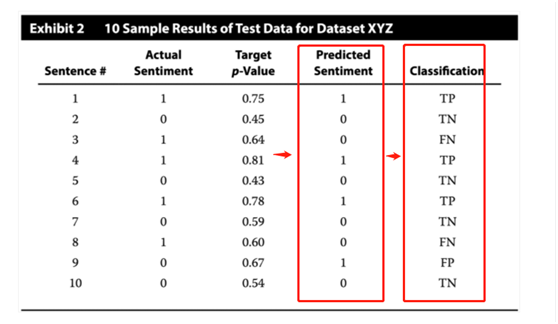

Azarov requests that Bector apply the ML model to the test dataset for Dataset XYZ, assuming a threshold p-value of 0.65. Exhibit 2 contains a sample of results from the test dataset corpus.

Based on Exhibit 2, the accuracy metric for Dataset XYZ’s test set sample is closest to:

Options:

A. 0.67

B. 0.70

C. 0.75

Explanation:

B is correct.

Accuracy is the percentage of correctly predicted classes out of total predictions and is calculated as (TP + TN)/(TP + FP + TN + FN).

To obtain the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), first determine the predicted sentiment for each instance by comparing its target p-value with the threshold p-value of 0.65. If the target p-value is greater than 0.65, the predicted sentiment is positive (Class “1”); if it is less than 0.65, the predicted sentiment is negative (Class “0”). Each instance is then classified by comparing its actual sentiment with its predicted sentiment: TP if both are Class “1”, TN if both are Class “0”, FP if the actual sentiment is Class “0” but the predicted sentiment is Class “1”, and FN if the actual sentiment is Class “1” but the predicted sentiment is Class “0”.
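The thresholding and classification steps can be sketched in Python. This is only an illustration: the four `samples` rows are made-up values, not the rows of Exhibit 2, and the `classify` helper is a hypothetical name. The source does not say how a p-value exactly equal to the threshold is handled; the sketch assumes it falls into Class "0".

```python
# Hypothetical (actual sentiment, target p-value) pairs for illustration only.
# These are NOT the rows of Exhibit 2.
THRESHOLD = 0.65

samples = [
    (1, 0.80),  # actual positive, p-value above the threshold
    (0, 0.70),  # actual negative, p-value above the threshold
    (1, 0.40),  # actual positive, p-value below the threshold
    (0, 0.20),  # actual negative, p-value below the threshold
]


def classify(actual, p_value, threshold=THRESHOLD):
    """Return 'TP', 'FP', 'TN', or 'FN' for one instance."""
    # Predicted class is 1 only when the p-value is strictly greater than
    # the threshold (assumption: a tie is treated as Class 0).
    predicted = 1 if p_value > threshold else 0
    if predicted == 1:
        return "TP" if actual == 1 else "FP"
    return "TN" if actual == 0 else "FN"


labels = [classify(actual, p) for actual, p in samples]
print(labels)  # ['TP', 'FP', 'FN', 'TN']
```

Counting how often each label occurs gives the four cells of the confusion matrix.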

Exhibit 2, with added “Predicted Sentiment” and “Classification” columns, is presented below:

Based on the classification data obtained from Exhibit 2, a confusion matrix can be generated:

Using the data in the confusion matrix above, the accuracy metric is computed as follows:

Accuracy = (TP + TN)/(TP + FP + TN + FN).

Accuracy = (3 + 4)/(3 + 1 + 4 + 2) = 0.70.
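As a quick check of the arithmetic, the same calculation can be written as a small Python sketch. The counts are the ones used in the explanation (TP = 3, FP = 1, TN = 4, FN = 2); the function name `accuracy` is just an illustrative choice.

```python
# Confusion-matrix counts taken from the explanation.
tp, fp, tn, fn = 3, 1, 4, 2


def accuracy(tp, fp, tn, fn):
    """Share of correct predictions among all predictions."""
    return (tp + tn) / (tp + fp + tn + fn)


acc = accuracy(tp, fp, tn, fn)
print(f"{acc:.2f}")  # 0.70
```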

A is incorrect because 0.67 is the F1 score, not the accuracy metric, for the sample of the test set for Dataset XYZ, based on Exhibit 2. To calculate the F1 score, the precision (P) and the recall (R) ratios must first be calculated. Precision and recall for the sample of the test set for Dataset XYZ, based on Exhibit 2, are calculated as follows:

Precision (P) = TP/(TP + FP) = 3/(3 + 1) = 0.75.

Recall (R) = TP/(TP + FN) = 3/(3 + 2) = 0.60.

The F1 score is calculated as follows:

F1 score = (2 × P × R)/(P + R) = (2 × 0.75 × 0.60)/(0.75 + 0.60) = 0.667, or 0.67.
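The precision, recall, and F1 figures can be reproduced with a few lines of Python. This is a minimal sketch using the counts from the explanation (TP = 3, FP = 1, FN = 2); the variable names are illustrative.

```python
# Counts taken from the explanation.
tp, fp, fn = 3, 1, 2

precision = tp / (tp + fp)  # 3 / 4 = 0.75
recall = tp / (tp + fn)     # 3 / 5 = 0.60

# F1 is the harmonic mean of precision and recall.
f1 = 2 * precision * recall / (precision + recall)
print(round(f1, 3))  # 0.667
```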

C is incorrect because 0.75 is the precision ratio, not the accuracy metric, for the sample of the test set for Dataset XYZ, based on Exhibit 2. The precision score is calculated as follows:

Precision (P) = TP/(TP + FP) = 3/(3 + 1) = 0.75.

Topic: Model Training - Performance Evaluation

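Hello, I still don't understand how TP is determined from comparing the p-values. Could you give an example? Also, I've noticed that the images for several questions don't display on mobile devices, and it isn't a network-speed issue. Could this be fixed?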